Italy’s data protection authority, Garante, has fined Luka Inc., the San Francisco-based developer of the AI chatbot Replika, €5 million ($5.64 million) for violating user data protection rules.

The decision, made public on Monday, highlights how important it is for AI platforms to have strong privacy safeguards as regulatory oversight grows.

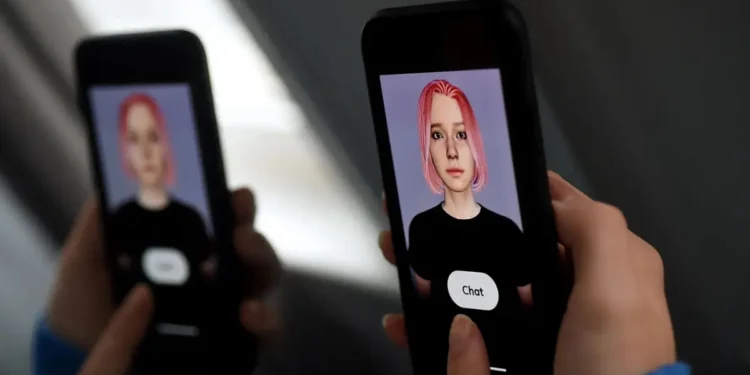

Replika, which launched in 2017, lets users chat with AI avatars meant to offer companionship and emotional support. But Garante found that the company processed user data without a proper legal basis and did not have effective age checks to stop children from using the service. These failures put vulnerable users at risk, especially because conversations with AI often involve sensitive information.

Italy’s strict action fits into a larger EU trend, where data privacy rules under the General Data Protection Regulation (GDPR) guide AI oversight. Garante’s probe into Replika is part of its wider effort to ensure AI systems comply with EU privacy laws, focusing on data collection, user consent, and protection measures.

This isn’t the first time Garante has taken strong action. According to Reuters, it briefly banned OpenAI’s ChatGPT in 2023 and fined the company €15 million in 2024 for GDPR breaches, showing that even top AI platforms face tough regulation.

Earlier this year, in April 2025, Garante alerted users that Meta Platforms (owner of WhatsApp, Facebook, and Instagram) would start using their personal data to train its AI unless they opted out. And in January 2025, it sought information from China’s DeepSeek about risks to Italians’ personal data.

The ruling against Replika sends a clear warning to AI developers: they must build strong data protection systems, especially around user consent, age verification, and limiting data collection.

As AI handles more sensitive data, regulators will likely increase scrutiny, pushing developers to follow privacy-by-design principles.

In short, Italy’s €5 million fine tells AI companies they must meet strict privacy rules or face serious penalties. Protecting user data and following regulations will be key to building trust and avoiding legal trouble as AI grows.